Here are some simulation results for rate adjust. The conditions are that the playback device runs 0.01% slower than capture, and that the buffer level is 1200 when the simulation starts. Some random noise is added to the buffer level measurements. The target level is 1000. After half the simulation has run, the playback rate starts varying, modelled as a slow sine with a 150 s period and an amplitude of 0.003%.
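A minimal sketch of the kind of buffer model described above, for readers who want to experiment. The sample rate (48 kHz), the uniform measurement noise of +/- 20 frames and the sign convention for the rate adjust are assumptions, because the post does not state them. This is not the code behind the plots.

```python
import math
import random


def simulate(controller, duration=1200.0, period=10.0, fs=48000.0,
             start_level=1200.0, noise=20.0, seed=1):
    """Simulate the buffer level under a rate-adjust controller.

    controller(measured_level) returns a relative rate adjust
    (1e-4 means playback is sped up by 0.01 %).
    Assumed model (hypothetical, not the simulation used for the plots):
    - capture runs 0.01 % faster than playback, so without correction the
      buffer gains fs * 1e-4 frames per second;
    - from half-time on, the mismatch varies as a 150 s sine with
      0.003 % amplitude;
    - each measurement gets uniform noise of +/- `noise` frames.
    """
    rng = random.Random(seed)
    level = start_level
    adjust = 0.0
    t = 0.0
    history = []
    while t < duration:
        mismatch = 1e-4
        if t >= duration / 2:
            mismatch += 3e-5 * math.sin(2 * math.pi * t / 150.0)
        # A positive adjust speeds up playback and drains the buffer.
        level += fs * period * (mismatch - adjust)
        measured = level + rng.uniform(-noise, noise)
        adjust = controller(measured)
        t += period
        history.append((t, level, measured, adjust))
    return history


# A controller that never adjusts lets the level drift upwards.
uncorrected = simulate(lambda measured: 0.0, duration=100.0)
```

Any of the controller sketches discussed in this thread can be passed in as `controller`, with the adjust period set to 10 or 2 seconds to mirror the two cases plotted.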

Note that some sims run until 1200s while others stop at 600s. The blue curve is the rate adjust divided by a million, and green is the measured buffer level (fuzzy because of the added noise).

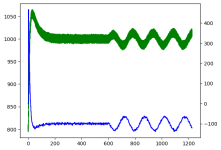

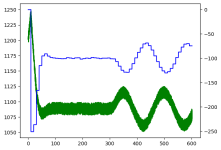

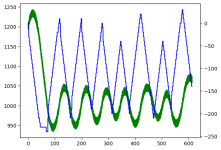

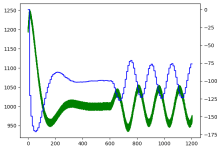

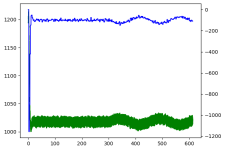

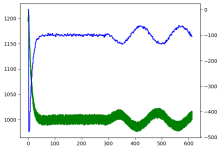

The old controller, used in v1.0 and v2.0 for all backends except Alsa:

10 second adjust period:

2 second adjust period:

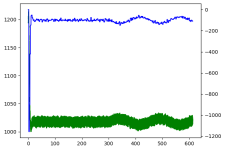

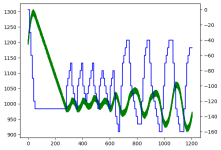

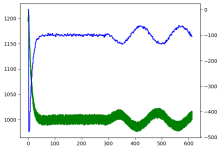

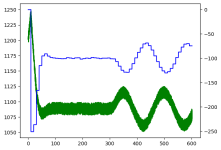

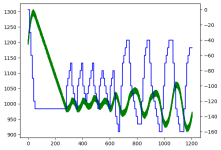

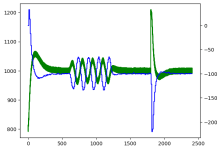

The new controller, used for Alsa in v2.0:

10 second adjust period:

and 2 second period:

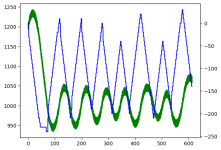

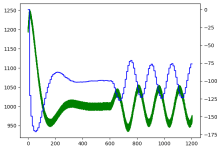

Here is the current leader:

10 second adjust period:

2 second period:

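The v1 controller makes larger adjustments than needed, especially at short adjust periods. It's a plain P-controller: its correction is proportional to the level error, so holding the rate correction needed to cancel the 0.01% mismatch requires a constant nonzero error, and the level never reaches the target of 1000.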
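The steady-state offset of a pure P-controller can be checked on a noise-free version of the buffer model. This is a sketch under assumed numbers (48 kHz, 10 s adjust period, gain `kp` in relative rate per frame of error); the gains are illustrative and are not the ones used in v1.

```python
def p_final_level(kp, mismatch=1e-4, target=1000.0, start=1200.0,
                  fs=48000.0, period=10.0, steps=500):
    """Run a pure P-controller on a noise-free buffer model (assumed model).

    Each adjust period the level changes by fs * period * (mismatch - adjust),
    with adjust = kp * (level - target).
    """
    level = start
    for _ in range(steps):
        adjust = kp * (level - target)
        level += fs * period * (mismatch - adjust)
    return level


# The loop settles where adjust == mismatch, i.e. level = target + mismatch / kp,
# provided 0 < kp < 2 / (fs * period) so that the iteration stays stable.
print(p_final_level(1e-6))  # about 1100: a permanent 100-frame offset
```

A larger gain shrinks the offset, but in this model the gain is capped by the stability limit `2 / (fs * period)`, so the offset can never be driven to zero with P alone.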

v2 is supposed to be a smarter controller that makes small adjustments to find the optimal steady-state rate. Unfortunately it tends to never quite settle and instead hunts around the target. This is not a simulation artifact; it often does this in reality too. The adjustments are small, but still unwanted. It also reacts slowly at the start, so the buffer level has time to rise a bit further before it starts decreasing towards the target.

v3 is a PI controller with a lower P-gain than v1, and the I-part lets it reach the target. The rate changes while correcting the initial offset are about half as large as for v1.
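The same noise-free model with an integral term added shows why the PI controller reaches the target. The gains below are hypothetical values chosen only so that the loop is stable in this model (48 kHz, 10 s period); they are not the v3 gains.

```python
def pi_final_level(kp, ki, mismatch=1e-4, target=1000.0, start=1200.0,
                   fs=48000.0, period=10.0, steps=2000):
    """Run a PI controller on a noise-free buffer model (assumed model).

    The integral keeps accumulating while any error remains, so it can hold
    the rate correction needed to cancel the mismatch even when the
    proportional part is zero, i.e. when the level sits exactly at the target.
    """
    level = start
    integral = 0.0
    for _ in range(steps):
        error = level - target
        integral += error * period
        adjust = kp * error + ki * integral
        level += fs * period * (mismatch - adjust)
    return level


print(pi_final_level(kp=1e-6, ki=2e-8))  # about 1000.0: no steady-state offset
```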

For fun I tried a PID controller as well. It can give a somewhat nicer step response at the start, but it adds too much noise to be worth it.


Great test, thanks a lot! I like the v3 10 s result, as it has a small overshoot in the rate adjustment. The rate adjustment is "innocuous" in non-resampling chains, but with async resampling it directly influences (in fact damages) the resampled signal.


IMO it's not critical to reach the target level exactly; it's just a number nobody cares much about, unless some fixed latency is required, e.g. to keep lip-sync with video (and that latency also includes delays other than the buffer level, e.g. processing time, which may vary with CPU load). Maybe we should ask what is important. IMHO (unless a specific stable latency is critical):

- no large peaks in rate adjust, as these directly impact the signal when async resampling. Rate adjust as smooth as possible (in absolute numbers)

- no large overshoots of buffer level, to minimize the risk of buffer underflow at small target levels (small safety margin). This conflicts with the previous condition, as a small initial peak in rate adjust means the initial rate difference takes longer to be "tamed", while the buffer level keeps moving in the wrong direction.

Henrik, could you please test a scenario where the playback is slower but the initial buffer level is below the target? IMO the PI regulator will try to speed up the capture even more to make up for the buffer deficit, but that will further increase the rate difference. Maybe a bit of D could be really useful in these cases: if the buffer level keeps moving in one direction (difference, derivative), the rate is clearly mismatched. IMO the primary goal should be to find the rate equilibrium fast (so that the buffer level becomes stable and under control), while reaching the required target level (the buffer level is the rate difference integrated over time) is less critical and can take longer. I know it's easy to say but quite difficult to do.

The only time a rate correction is audible is in the acoustic die-away of 'sine wave' type natural instruments - primarily piano, but also clarinet, harp, marimba etc. So how about timing the correction to coincide with a musical impulse? This is where 99% of edits take place. No idea if this is feasible!
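One way to prototype the idea is to hold back a pending rate change until a block with a sudden energy increase (an onset) arrives. The sketch below is a hypothetical heuristic, not anything CamillaDSP does: per-block energies are taken as input, `ratio`, `alpha` and `max_wait` are made-up parameters, and a fallback deadline stops the buffer from drifting when the music has no clear onsets.

```python
def gate_rate_updates(block_energies, ratio=4.0, alpha=0.1, max_wait=50):
    """Return the block indices where a pending rate change would be applied.

    Hypothetical heuristic: a block counts as an onset when its energy exceeds
    `ratio` times an exponential running average of the previous blocks.
    If no onset occurs within `max_wait` blocks, the change is applied anyway
    so that the buffer level cannot drift without limit.
    """
    apply_at = []
    avg = None
    waited = 0
    for i, energy in enumerate(block_energies):
        onset = avg is not None and energy > ratio * avg
        waited += 1
        if onset or waited >= max_wait:
            apply_at.append(i)
            waited = 0
        # Update the running average after the decision, so it only covers
        # earlier blocks when the next block is tested.
        avg = energy if avg is None else (1 - alpha) * avg + alpha * energy
    return apply_at


quiet_then_hit = [1.0] * 10 + [10.0] + [1.0] * 10
print(gate_rate_updates(quiet_then_hit, max_wait=100))  # [10]
```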

Henrik, thanks for the test. IIUC, the higher the initial difference between the buffer level and the target, the higher the overshoot. But the overshoot itself has no relation to the actual rate difference; it's caused by the initial difference. The current code tries to keep the initial difference minimal with the initial sleep https://github.com/HEnquist/camilla...44ebaec26e4f9e91a/src/alsadevice.rs#L111-L113 (thanks for merging the PR, it was exactly for this discussion).


But after an xrun (which in some configs happens at every start/unpause, as Michael reported above; we may discuss it later, I have seen it in my tests too) the same sleep occurs https://github.com/HEnquist/camilla...f842144ebaec26e4f9e91a/src/alsadevice.rs#L108 , but very often an additional chunk is already waiting in the queue, and the buffer level right after the xrun ends up at (target-ish + 1 chunk). This causes an even higher overshoot in the rate adjust, even though the rate adjust may already have been perfectly stabilized at the correct capture/playback ratio before the xrun (the xrun could have been caused by delayed processing due to a CPU peak, CPU frequency transition latency etc.). In cases where the exact latency is not critical (and these are quite common), it may make sense to keep the stabilized rate adjust and only slowly return to the target level through small, controlled changes of the rate adjust. There is no need to push hard for the target if the buffer is nicely stable. The target level is basically "just" an arbitrarily chosen value, whereas the stabilized rate adjust is determined by the actual capture/playback hardware and stays fixed most of the time.

Maybe there are several inputs to the control:

- distance to target level going to zero => target level reached

- trend of the buffer level change going to zero => correct rate adjust reached

- observing some minimal buffer level - a safety measure that would always kick in to avoid an xrun (= failure of the control loop), regardless of the other requirements
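The three inputs listed above could be computed from a short history of buffer level measurements, for example as below. This is a hypothetical sketch: the trend is a least-squares slope over the window (in frames per second), and the function and key names are made up for illustration. In the simple buffer model used in this thread, dividing the trend by the sample rate gives the remaining relative rate error.

```python
def level_trend(levels, period):
    """Least-squares slope of recent buffer levels, in frames per second."""
    n = len(levels)
    if n < 2:
        raise ValueError("need at least two measurements")
    times = [i * period for i in range(n)]
    t_mean = sum(times) / n
    l_mean = sum(levels) / n
    num = sum((t - t_mean) * (x - l_mean) for t, x in zip(times, levels))
    den = sum((t - t_mean) ** 2 for t in times)
    return num / den


def control_inputs(levels, period, target, min_level):
    """Hypothetical summary of the three controller inputs."""
    current = levels[-1]
    return {
        # 1. distance to target: zero means the target level is reached
        "distance_to_target": current - target,
        # 2. trend: zero means the rate adjust is correct
        "trend": level_trend(levels, period),
        # 3. safety: True means the minimum level is violated
        "below_minimum": current < min_level,
    }


print(control_inputs([1000, 1010, 1020, 1030], 10.0, 1000, 500))
```

Weighting the first two entries differently would give the two use scenarios discussed next, while the third acts as an override.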

Some use scenarios would prefer the first (fixed latency for AV lip-sync, which needs aggressive changes to get back to the target level fast), others the second (probably low latency with small buffers, avoiding aggressive changes and large buffer fluctuations).

Adding D to the PID causes fluctuations. IMO they come from fluctuations in the buffer level that are caused not by the rate adjust but by OS timing and processing delays, yet they directly affect the rate adjust through the D term, causing fluctuations/noise. IMO these short-term fluctuations should be ignored and only the trend used, not the momentary difference (x(n) - x(n-1)) - adding some low-pass filter? The averager already provides a low-pass filter, but it also determines how often the control adjustment runs, which may need to happen more often than the low-pass filter decay allows for the second controller input. Maybe the averager depth and the adjustment check period could be independent, or a separate averager/LPF could be used for the D term...

Perhaps a weighted two-input PID with some built-in safety "sidekicks" would be a way to go. It may support both scenarios above, by changing weights to the respective input variable.

Just some thoughts for discussion. Controlling this time-critical system, which is also influenced by external factors, is no easy task.


> ...through the D term, causing fluctuations/noise. IMO these short-term fluctuations should be ignored, only the trend, not the momentary difference (x(n) - x(n-1)) - adding some low-pass filter?

I have tried this. It helps to reduce noise, but at the same time this delays the action of the D. I have not managed to find a set of parameters where I feel that the D improves things.
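The trade-off described above (less noise, but a delayed D action) is easy to reproduce with a first-order low-pass filter on the level difference. The sketch assumes an exponential filter with coefficient `alpha` and synthetic data; it is only an illustration, not the filter that was actually tried.

```python
import random
from statistics import pstdev


def d_term(levels, period, alpha):
    """Derivative of the buffer level (frames/s), optionally low-pass filtered.

    alpha = 1.0 gives the raw difference (x(n) - x(n-1)) / period;
    0 < alpha < 1 adds a first-order exponential low-pass filter, which
    suppresses measurement noise but makes the output lag real changes.
    """
    out = []
    filtered = 0.0
    for prev, cur in zip(levels, levels[1:]):
        raw = (cur - prev) / period
        filtered += alpha * (raw - filtered)
        out.append(filtered)
    return out


rng = random.Random(0)
noisy = [1000 + rng.gauss(0, 10) for _ in range(2000)]  # flat level + noise
ramp = [2.0 * 10 * i for i in range(20)]                 # true slope: 2 frames/s

# Noise in the D input: raw vs filtered.
print(pstdev(d_term(noisy, 10.0, 1.0)), pstdev(d_term(noisy, 10.0, 0.2)))
# Response to a real trend: raw is immediate, filtered creeps up towards 2.0.
print(d_term(ramp, 10.0, 1.0)[:3], d_term(ramp, 10.0, 0.2)[:3])
```

Lowering `alpha` improves the first line and worsens the second, which is the tension described above.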

> ...but very often an additional chunk is already waiting in the queue and the buffer level right after xrun ends up at (target-isch + 1 chunk).

This can be solved by using a crossbeam-channel instead of the one from the standard library. It has a method to get the number of waiting messages. Any waiting chunk should be added to the buffer level.
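The idea of counting waiting chunks can be illustrated in Python, with `queue.Queue.qsize()` standing in for the message count that crossbeam-channel's `Receiver::len()` provides in Rust. The function and parameter names are hypothetical; this is not CamillaDSP code.

```python
import queue


def effective_level(device_frames, chunk_queue, chunksize):
    """Playback buffer level including chunks still waiting in the channel.

    Python analogue only: queue.Queue.qsize() stands in for the waiting-message
    count of a crossbeam channel. device_frames (hypothetical name) is the
    number of frames already in the playback device buffer. qsize() is
    approximate if other threads put or get at the same time.
    """
    return device_frames + chunk_queue.qsize() * chunksize


chunks = queue.Queue()
chunks.put([0.0] * 1024)  # one chunk arrived while playback was sleeping
print(effective_level(1000, chunks, 1024))  # 2024
```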

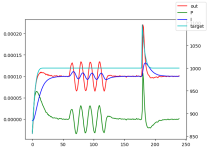

> Perhaps a weighted two-input PID with some built-in safety "sidekicks" would be a way to go. It may support both scenarios above, by changing weights to the respective input variable.

Right now I'm experimenting with something that looks kinda promising. I use the PI-controller, but added some logic for the start-up that makes it smoothly ramp the target level from the current level to the desired one. It also triggers this ramp if the level somehow ends up far away from the target (with a configurable limit for what is considered far away).

Here is what this looks like, with a 10 second adjust period. I added a step to the level after three quarters of the run to trigger a new ramp. The ramp gives a much smoother return to the target, without any big overshoots.
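A sketch of how such a ramped working target might be structured. The post does not show the actual code, so the class name, the linear ramp shape and the rule of only re-triggering after a finished ramp are all assumptions. The PI controller would then use `measured - working_target(measured)` as its error instead of the fixed target.

```python
class TargetRamp:
    """Hypothetical working-target ramp for a PI rate controller.

    On start-up, or when the measured level ends up more than
    `trigger_distance` frames from the configured target after a ramp has
    finished, the working target restarts at the measured level and moves
    linearly to the configured target over `ramp_steps` adjust periods.
    """

    def __init__(self, target, trigger_distance, ramp_steps):
        self.target = target
        self.trigger_distance = trigger_distance
        self.ramp_steps = ramp_steps
        self.start = None
        self.step = 0

    def working_target(self, measured):
        ramp_done = self.start is not None and self.step >= self.ramp_steps
        far_away = abs(measured - self.target) > self.trigger_distance
        if self.start is None or (ramp_done and far_away):
            # (Re)start the ramp from wherever the level is now.
            self.start = measured
            self.step = 0
        frac = min(self.step / self.ramp_steps, 1.0)
        self.step += 1
        return self.start + (self.target - self.start) * frac


ramp = TargetRamp(target=1000.0, trigger_distance=200.0, ramp_steps=4)
print([ramp.working_target(1200.0) for _ in range(5)])
```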

And the internals from the controller (the target uses the scale on the right side):

> The only time when a rate correction is audible is in the acoustic die away of 'sine wave' type natural instruments - primarily piano - but also clarinet and harp marimba etc. So, how about timing the correction to coincide with a musical impulse? This is where 99% of edits take place. No idea if this is feasible!

That is a good idea, but quite hard to accomplish.

@HenrikEnquist

This is a great tool, and I used it for digital frequency division on macOS with very good results.

I am not familiar with how this software is implemented, but here is a suggestion for reference: could you compare whether using EMD (Empirical Mode Decomposition) instead of FFT for the transformation would improve the output, especially the transient response? Thanks for the work!


I've posted the first beta version of ATC. If anyone dares to test it...

ATC is a utility program designed for Lyrion Music Server and CamillaDSP, aimed at minimizing the number of audio rendering stages.

This is achieved by transferring digital volume control from LMS to CamillaDSP and adjusting the sample rate in CamillaDSP. Optional resampling profiles can be configured based on the track sample rate.

Amplitude control may incorporate features such as replay gain and lessloss, using fixed coefficient values to reduce rounding errors when volume-scaled 16-bit audio is truncated to 24-bit.
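Why fixed coefficients can avoid rounding: a 16-bit sample placed in a 24-bit word has 8 spare low bits, so any gain that is an exact multiple of 2^-8 (and at most 1) maps every sample exactly onto the 24-bit grid. The check below assumes this is the kind of coefficient meant; the ATC code itself is the authority on what it actually does, and `is_lossless_gain` is a hypothetical helper.

```python
from fractions import Fraction


def is_lossless_gain(gain, in_bits=16, out_bits=24):
    """True if scaling any in_bits sample by `gain` is exact at out_bits.

    An in_bits sample in an out_bits word is shifted left by
    (out_bits - in_bits) bits. The product is exact when gain * 2**shift is
    an integer, and cannot overflow when 0 <= gain <= 1.
    """
    shift = out_bits - in_bits
    scaled = Fraction(gain) * 2**shift
    return scaled.denominator == 1 and 0 <= scaled <= 2**shift


print(is_lossless_gain(0.5))                 # True: -6.02 dB
print(is_lossless_gain(Fraction(181, 256)))  # True: about -3.0 dB
print(is_lossless_gain(10 ** (-3 / 20)))     # False: needs rounding
```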

Code and instruction here: https://github.com/StillNotWorking/LMS-helper-script/tree/main/atc

Thanks to @HenrikEnquist for steering me in the right direction in understanding how pyCamilla makes use of Python dictionaries.


@TNT - On RPi-OS Lite, ALSA will do the resampling if the sample rates of CamillaDSP and of the track playing in Squeezelite do not match.

To the best of my knowledge, if replay gain is activated or digital volume is used for the player, Squeezelite forwards these tasks to ALSA. While Squeezelite can handle volume adjustments itself, my understanding is that it prefers ALSA to manage this.

My thinking is that CDSP will do both of these tasks better.


> Could you compare if using EMD(Empirical Mode Decomposition) instead of FFT for transformation will improve the output, especially the transient effect?

As far as I know, EMD is useful for analyzing signals. All examples I have seen use it to analyze isolated sounds, not complex music material. I don't really see how it could be used for filtering. Have you seen it used for this?

Good to hear about your good results. Maybe RPi5 with its fast RAM would handle even chunk size 64.

I've been using 64 chunk size, 192 target level, 10 second adjust interval at 48 kHz for the last few days with the RPi5 + S2 Digi + DAC8x setup and it works great. Latency is under 10 ms. It seems like target level = 3 x chunk size works very well and I don't see any of the weird run downs in buffer level that I did with target level = 1 x chunk size.

Michael
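For reference, converting these settings to time at 48 kHz: one chunk lasts about 1.33 ms and the 192-frame target level corresponds to 4 ms of audio, which is consistent with a total latency under 10 ms once the other delays in the chain are added.

$$
\frac{64\ \text{frames}}{48\,000\ \text{frames/s}} \approx 1.33\ \text{ms},
\qquad
\frac{192\ \text{frames}}{48\,000\ \text{frames/s}} = 4\ \text{ms}
$$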

> This can be solved by using a crossbeam-channel instead of the one from the standard library. It has a method to get the number of waiting messages. Any waiting chunk should be added to the buffer level.

I think the additional chunk arrives while playback is in that sleep, i.e. it cannot be accounted for in the sleep time calculation because it isn't known beforehand. But it could be checked after the sleep and handled somehow.

> Right now I'm experimenting with something that looks kinda promising. I use the PI-controller, but added some logic for the start-up that makes it smoothly ramp the target level from the current level to the desired one. It also triggers this ramp if the level somehow ends up far away from the target (with a configurable limit for what is considered far away).

I like the idea of the variable target level for the algorithm a lot. IMO it could allow reaching both goals.

The buffer level measured after start/restart could be kept as the working target until the correct sample rate adjust is reached (i.e. the buffer level becomes stable). Maybe the last known rate adjust could be used, which may speed up this stage after an xrun caused by randomly delayed delivery of chunks (CPU load burst), since the previous adjust was already correct. After this stage, the slow ramp of the working target towards the requested target could start.

Just a side note: In your simulations - I wonder if testing the fluctuating true rate adjust reflects reality. IMO the true rate adjust is basically fixed and does not change much in time, for the given input/output devices. What changes systematically is the buffer level (after xruns, delayed chunk delivery, etc.). IIUC in your tests you have the buffer level fluctuating with a random noise, i.e. zero mean. Would it make sense to test the buffer variations to be systematic instead of the true rate adjust variation? IMO it may better reflect what's going on in CDSP.

I am thinking about the user-configurable target level. It does not define the overall latency, users typically do not understand what it does (it is unavoidably quite complex) and sometimes set it suboptimally. I understand that there must be some working target for the control algorithm; the question is how it's defined.

If no latency requirement were defined, I could imagine using the initial buffer level (after start/restart), with some lower limit (e.g. 1 chunksize minimum), basically doing only the first control stage (reaching a stable rate adjust) described above, without ramping to the pre-configured value. The configured target would be used for the start/restart sleep calculation. Just giving it for discussion, whether it would have any benefit.

But what could be very useful is support for defining/maintaining the overall latency. E.g. CDSP would be configured to run with 20 ms overall latency (to give enough safety margin) for capture buffer -> processing -> playback buffer. With the video player or AVR configured to add a fixed 20 ms delay (plus the player-side audio buffer delay) to the video, lip-sync would be preserved, even after xruns, no matter whether CDSP ran internally via loopback or separately on some ARM board via USB audio. This already goes into the realm of PipeWire with fixed latency, RTP with included timestamps, etc... But maybe it would not be very complicated:

* Playback buffer would be set large enough to fit the whole required latency. The maximum buffer size has no further impact, just RAM consumption.

* Chunksize could be defined by user, as is now (later maybe set automatically to fit the required latency optimally)

* IIUC the overall latency is capture time (1 chunktime) + chunk resampling/processing time (the creation timestamp is already in the AudioChunk struct) + playback buffer fill (= the working target).

* The start/restart playback sleep (= the target level expressed as time) would then be calculated as [the required latency - capture time (1 chunktime) - the first chunk resampling/processing time]

* Of course the required latency would have to be checked for feasibility with the requested chunksize, a reasonable working target level, and the measured first chunk processing time - e.g. a smaller chunk could be suggested
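The budget in the list above can be turned into a small calculation. This is a hypothetical sketch: `startup_sleep` and its parameters are made-up names, the processing time is assumed to be measured on the first chunk, and at least one chunk of playback buffer fill is assumed to be required for safety.

```python
def startup_sleep(required_latency_s, chunksize, samplerate, processing_time_s,
                  min_level_chunks=1.0):
    """Hypothetical latency budget: returns (sleep_s, target_frames).

    overall latency = capture time (1 chunk) + processing time + playback fill,
    so the playback buffer fill (the working target) is what is left over.
    Raises ValueError if less than min_level_chunks of fill would remain.
    """
    chunk_time = chunksize / samplerate
    fill_time = required_latency_s - chunk_time - processing_time_s
    if fill_time < min_level_chunks * chunk_time:
        raise ValueError(
            f"{required_latency_s * 1000:.1f} ms is too short for chunksize "
            f"{chunksize}; try a smaller chunksize"
        )
    return fill_time, round(fill_time * samplerate)


# 20 ms total, 256-frame chunks at 48 kHz, 1 ms measured processing time:
# roughly 13.7 ms of sleep and a 656-frame working target.
print(startup_sleep(0.020, 256, 48000, 0.001))
```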

If the video player used audio clock as its master clock (like e.g. mplayer does) or adjusted the audio stream to fit the video clock, IMO the above chain would keep lipsync. If not, there are no timestamps in the stream and that would require some side channel or RTP capture backend. But IMO just the fixed latency of the chain itself (skewed by the playback clock eventually) could be quite useful.

The required latency may be e.g. also specified as "minimal" - then target level would be set e.g. to 1 chunksize.

Just thoughts for discussion


> The buffer level measured after start/restart could be kept as working target until correct samplerate adjust was reached (i.e. the buffer level gets stable). Maybe the last known rate adjust could be used which may speed up this stage after xrun caused by random delayed delivery of chunks (CPU load burst) (the previous adjust was already correct). After this stage the slow ramp of the working target towards the requested target could start.

I think this should not be made too complicated. After an underrun or such, the rate will still be correct, and just the buffer level is off. We can just as well start slowly bringing the level back to the target right away, I don't see any need for waiting.

> Just a side note: In your simulations - I wonder if testing the fluctuating true rate adjust reflects reality. IMO the true rate adjust is basically fixed and does not change much in time, for the given input/output devices. What changes systematically is the buffer level (after xruns, delayed chunk delivery, etc.). IIUC in your tests you have the buffer level fluctuating with a random noise, i.e. zero mean. Would it make sense to test the buffer variations to be systematic instead of the true rate adjust variation? IMO it may better reflect what's going on in CDSP.

The sinusoidal rate change is definitely not very realistic. In reality the rates change very little; they tend to stay almost constant, with just some small and very slow drift. The sine is there to test that it does follow these things, and I made it fast (feels weird to call a 150 second period "fast") so that I can see the result without running very long simulations. Too long sims just get annoying to look at, the simulation is quick to finish regardless.

The buffer level does fluctuate quite randomly at each measurement, so adding the random there is a decent model. I added the step to test the buffer underrun case, this was missing from the first runs.

> I am thinking about the user-configurable target level. It does not define the overall latency, users typically do not understand what it does (it unavoidably is quite complex) and sometimes set it suboptimally. I understand that there must be some working target for the control algorithm, the question is how it's defined.

This is a bit of a problem, yes. It's possible to leave the target level out and use a default value. That should maybe be the most common case, and only users with special requirements should define a target level. This would then mostly be a documentation change.

> But what could be very useful is support for defining/maintaining overall latency. E.g. CDSP would be configured to run with 20ms (to give enough safety margin) overall latency capture buffer -> processing -> playback buffer.

Hmm, dunno... I definitely see how it could be useful, but my gut feeling is that this would be quite difficult and really time-consuming to get right, especially considering that the different audio APIs work in quite different ways.

> * Chunksize could be defined by user, as is now (later maybe set automatically to fit the required latency optimally)

Chunksize is also important for the convolution, to get a good compromise between latency and CPU load for long filters. You would probably need to choose to specify either a latency or a chunksize.

Hello, I'm reaching out to see if anyone might have a solution to the issues I am having with camilladsp.

I use Fusiondsp/camilladsp on RPI4 streaming from Volumio.

I need a more complex DSP pipeline than is possible in Fusiondsp, so I turn on the CamillaDSP GUI.

I have various test configs that share some common issues:

1. When using shortcuts to select a configuration, I have noticed that I must press the config selection button twice to get the DSP loaded. The first press shows the config as loaded and activated, with the two check boxes "all saved" and "all applied" and the name of the config at the top of the config panel. But only when I press the config selection a second time do I hear the changes.

2. In the config panel, sometimes the config changes to "no config selected" without me initiating a change.

3. Sometimes, when switching configs, usually on the second press, the music plays in slowed down audio, like slow motion. When this happens, I have to re-start the song to recover.

4. When a song finishes, the DSP status shows "stalled" rather than something like "ready". I'm not sure if this reflects an issue.

5. The log file does not show any useful errors

That's the background, now the meat.

4. Using gain on the mixers works, e.g. -5 dB. However, the mute functions, on both the source and the destination, do not work, and neither does mute in the Gain filter. Songs play normally even though one channel is muted.

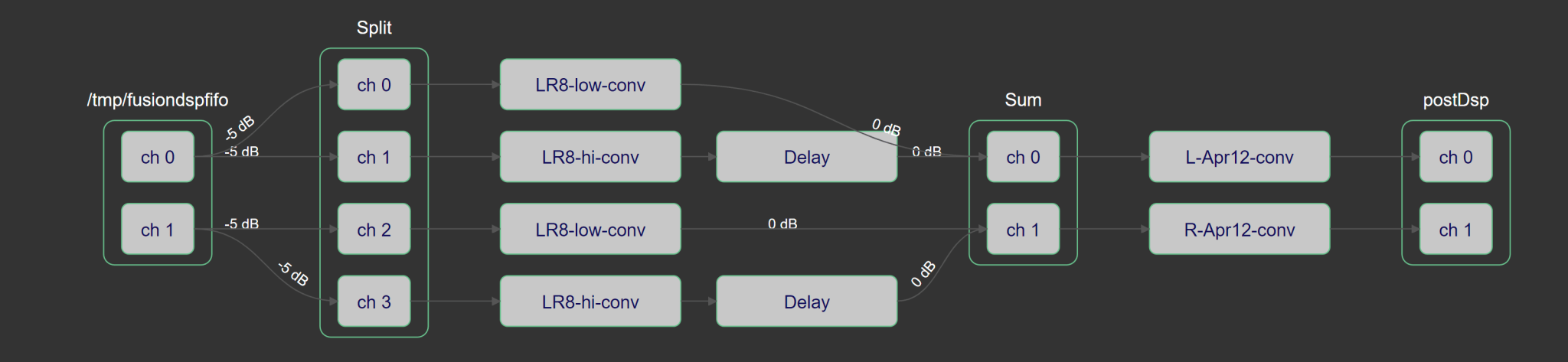

5. Here is a typical pipeline

Thank you in advance for any assistance. I'm stuck at the moment in implementing time alignment with convolution EQ from Rephase.


> 1. When using shortcuts to select a configuration, I have noticed that I must press the config selection button twice to get the dsp loaded. The first press shows the config was loaded and activated with the two check boxes "all saved" and "all applied", and has the name of the config at the top of the config panel. But it's only when I press the config selection a second time do I hear the changes.
>
> 2. In the config panel, sometimes the config changes to "no config selected" without me initiating a change.
>
> 3. Sometimes, when switching configs, usually on the second press, the music plays in slowed down audio, like slow motion. When this happens, I have to re-start the song to recover.
>
> 4. When a song finishes, the dsp status shows "stalled" rather than something such as "ready". I'm not sure if this reflects an issue.
>
> 5. The log file does not show any useful errors

These things all depend on how Fusiondsp is implemented. I don't know the details here, can you ask the fusiondsp author?

> 4. Using gain on the mixers works e.g. -5db. However, the mute functions, both on the source and the destination do not work, and neither does it work in the Gain filter Songs play normally even though one channel is muted.

There have been a few rare reports of this issue, but I have never managed to reproduce it. Can you attach a complete config file where it happens?

> I think this should not be made too complicated. After an underrun or such, the rate will still be correct, and just the buffer level is off. We can just as well start slowly bringing the level back to the target right away, I don't see any need for waiting.

OK, makes sense, a slow ramp should do.

The sinusoidal rate change is definitely not very realistic. In reality the rates change very little, they tend to stay almost constant, with just some small and very slow drift. The sine is there to test that it does follow these things, and I made it fast (feels weird to call a 150 second period "fast") so that I can see the result without running very long simulations. Too long sims just get annoying to look at, the simulation is quick to finish regardless.

The buffer level does fluctuate quite randomly at each measurement, so adding random noise there is a decent model. I added the step to test the buffer underrun case, which was missing from the first runs.
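The measurement model described here (random noise on each buffer level reading, plus a step for the underrun case) can be sketched as an open-loop simulation with no rate adjustment applied. Assumptions, all hypothetical: Gaussian noise, a constant drift of 4.8 frames per second (0.01 % at an assumed 48 kHz), and the step modelled as a sudden drop in the level. The function name and parameters are illustrative only.

```python
import random
from typing import List


def simulate_measured_levels(n_steps: int, dt: float, start_level: float,
                             drift_per_s: float, noise_std: float,
                             step_at: int, step_size: float,
                             seed: int = 0) -> List[float]:
    """Open-loop buffer level with measurement noise and an underrun-like step.

    No rate adjustment is applied: the true level drifts by drift_per_s frames
    per second, drops by step_size at step index step_at, and every reading
    adds independent Gaussian noise with standard deviation noise_std.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    level = start_level
    measured = []
    for i in range(n_steps):
        if i == step_at:
            level -= step_size  # sudden drop, standing in for a buffer underrun
        level += drift_per_s * dt
        measured.append(level + rng.gauss(0.0, noise_std))
    return measured


levels = simulate_measured_levels(600, 1.0, 1200.0, 4.8, 5.0, 300, 800.0)
print(len(levels), round(min(levels)), round(max(levels)))
```

Feeding readings like these to a controller is one way to check both its sensitivity to measurement noise and how it recovers after the step.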

> This is a bit of a problem, yes. It's possible to leave the target level out and use a default value. That should perhaps be the most common case, and only users with special requirements should define a target level. This would then mostly be a documentation change.

That sounds perfect.
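Leaving `target_level` out and falling back to a default could look like the sketch below, which reads the `chunksize` and `target_level` keys of a `devices` section. The fallback value (equal to the chunksize) is an assumption for illustration, not necessarily what CamillaDSP actually uses, and `resolve_target_level` is a hypothetical helper.

```python
from typing import Any, Dict


def resolve_target_level(devices: Dict[str, Any]) -> int:
    """Return the target level, falling back to a default when it is left out.

    Assumption: the default used here is the chunksize. This is only for
    illustration and is not necessarily the default CamillaDSP uses.
    """
    chunksize = int(devices["chunksize"])
    target = devices.get("target_level")
    if target is None:
        return chunksize  # most users: no target_level given, use the default
    target = int(target)
    if target <= 0:
        raise ValueError("target_level must be positive")
    return target


print(resolve_target_level({"chunksize": 1024}))                        # 1024
print(resolve_target_level({"chunksize": 1024, "target_level": 2000}))  # 2000
```

Only users with special requirements would then set `target_level` explicitly; everyone else gets the default without having to understand its effects.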

> Chunksize is also important for the convolution, to get a good compromise between latency and CPU load for long filters. You would probably need to choose to specify either a latency or a chunksize.

It seems to me that chunksize is similar to the target level: an important parameter with many effects which are quite complex to explain. Overall latency may be easier to grasp, IMO.

If the target level were calculated based on the overall latency, chunksize could be adjusted as needed for optimal CPU load and buffer safety. While waiting for a start/restart, there could be more chunks in the processing-playback channel and more chunks written to the playback buffer. IMO the chunk size is up to the algorithm, with the maximum chunksize limited by the required latency.
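Calculating the target level from a requested overall latency amounts to subtracting the other latency contributions from the total. A sketch, assuming overall latency = capture + processing + playback (target level), all counted in frames at one sample rate; `target_level_for_latency` and its parameters are hypothetical names.

```python
def target_level_for_latency(latency_s: float, samplerate: int,
                             capture_frames: int, processing_frames: int) -> int:
    """Playback buffer target (frames) that makes the total latency hit latency_s.

    Assumes overall latency = capture + processing + playback (target level),
    with everything counted in frames at the same sample rate.
    """
    total_frames = round(latency_s * samplerate)
    target = total_frames - capture_frames - processing_frames
    if target <= 0:
        raise ValueError("requested latency is below capture + processing latency")
    return target


# 100 ms at 48 kHz is 4800 frames; with 1024 frames each of capture and
# processing latency, 2752 frames are left for the playback buffer.
print(target_level_for_latency(0.1, 48000, 1024, 1024))  # 2752
```

The error branch reflects the constraint mentioned above: a chunksize (and hence processing latency) that is too large for the requested latency leaves no room for the playback buffer.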

Is there an algorithm where a smaller chunksize gives better CPU load per sample? It would seem that a larger chunksize is always more efficient, but there may be exceptions. But for timing safety/precision, more frequent, smaller reads/writes may end up better than fewer large ones.

> Hmm, dunno. I definitely see how it could be useful, but my gut feeling is that this would be quite difficult and really time-consuming to get right, especially considering that the different audio APIs work in quite different ways.

I agree that it would be a major change. On the other hand, it could be made available only for some backends (those which allow it), with the backends implemented step by step.

Maybe it would not be overly complicated. Every backend has some capture latency, so there could be an additional method for getting an optional capture latency, where None would mean not supported.

Resampling + processing latency is already known https://github.com/HEnquist/camilla...77bfea45df8bfba6e22cc1/src/audiodevice.rs#L82

Playback latency is defined by the target level, IIUC.

And the sum of these three is the overall latency, IIUC. Of course, sorting out issues at xruns and pauses would be complex, but maybe not overly so.
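The optional capture latency (None meaning not supported) and the three-part sum can be sketched as follows. The classes and methods are hypothetical and only mirror the Option/None idea from the post; they are not CamillaDSP's actual backend API.

```python
from typing import Optional


class Backend:
    """Hypothetical backend base class (not CamillaDSP's actual API)."""

    def capture_latency(self) -> Optional[int]:
        # None means "not supported": this backend cannot report its capture latency.
        return None


class LatencyReportingBackend(Backend):
    """Hypothetical backend that can report a fixed capture latency in frames."""

    def __init__(self, latency_frames: int) -> None:
        self._latency_frames = latency_frames

    def capture_latency(self) -> Optional[int]:
        return self._latency_frames


def overall_latency(backend: Backend, processing_frames: int,
                    target_level: int) -> Optional[int]:
    """Capture + resampling/processing + playback (target level), in frames."""
    capture = backend.capture_latency()
    if capture is None:
        return None  # overall latency is unknown if capture latency is unsupported
    return capture + processing_frames + target_level


print(overall_latency(Backend(), 1024, 2048))                     # None
print(overall_latency(LatencyReportingBackend(512), 1024, 2048))  # 3584
```

Returning None instead of guessing keeps backends that cannot report capture latency usable, just without the latency-based features.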

IMO in many cases the chain could be configured with larger, safer buffers, allowing it to run on systems with large CPU peaks, if the overall latency were known and could be taken into account across the whole integration. Currently some users need to strive for minimum latency, which may not be necessary if a fixed, defined latency were available.

Should you consider such a feature viable, I could give it a try with your next30 branch.

> Is there an algorithm where a smaller chunksize gives better CPU load per sample? It would seem that a larger chunksize is always more efficient, but there may be exceptions.

Usually, a larger chunksize is more efficient. But for convolution it's a little trickier. For long filters, with length > chunksize, it's the same as usual. But if it's the other way around, i.e. filter length < chunksize, then a smaller chunksize is likely a bit faster (I have not checked this though). That's probably quite unusual, so it should be pretty safe to assume that a larger chunksize gives better performance.
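The chunksize trade-off for convolution can be illustrated with a rough operation count. This assumes uniformly partitioned FFT convolution with an FFT size of 2 × chunksize and ignores constant factors, memory effects and real-FFT savings; it is a toy model, not a measurement of CamillaDSP, and `conv_cost_per_sample` is a hypothetical name.

```python
import math


def conv_cost_per_sample(chunksize: int, filter_len: int) -> float:
    """Rough operation count per output sample for uniformly partitioned FFT convolution.

    Assumed model: FFT size 2 * chunksize, filter split into
    ceil(filter_len / chunksize) partitions, one forward and one inverse FFT
    per chunk costing n * log2(n) each, plus one complex multiply-add per bin
    (chunksize + 1 bins) per partition. Only comparisons are meaningful.
    """
    n_fft = 2 * chunksize
    partitions = math.ceil(filter_len / chunksize)
    fft_ops = 2 * n_fft * math.log2(n_fft)
    mac_ops = partitions * (chunksize + 1)
    return (fft_ops + mac_ops) / chunksize


for taps in (256, 65536):
    costs = {cs: round(conv_cost_per_sample(cs, taps), 1) for cs in (256, 1024, 4096)}
    print(taps, costs)
```

This prints `256 {256: 37.0, 1024: 45.0, 4096: 53.0}` and `65536 {256: 293.0, 1024: 108.1, 4096: 68.0}`: in this model, a short 256-tap filter is cheapest with the smallest chunksize, while for a long 65536-tap filter the cost drops steeply as the chunksize grows, in line with the reasoning above.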

> Should you consider such a feature viable, I could give it a try with your next30 branch.

I must admit I'm still a bit skeptical, but it could be worth giving it a shot to see how bad it would actually be.

- CamillaDSP - Cross-platform IIR and FIR engine for crossovers, room correction etc.